The Model Context Protocol (MCP) has emerged as a key enabler for AI agents that need to interact with real-world tools, data sources, and applications. Often compared to “USB-C for AI,” MCP gives large language models (LLMs) a consistent and secure way to “plug in” to services ranging from document databases and version-control systems to messaging platforms.

What Is MCP?

MCP stands for Model Context Protocol. Introduced by Anthropic in November 2024, it is an open standard designed to help AI models:

- Discover and use external tools dynamically

- Communicate securely with remote services

- Manage and share context—from database queries to file access—in a consistent way

Think of MCP as a common language that AI applications use to talk to servers (called “MCP servers”), which can run locally or remotely. These servers can expose everything from local file systems to cloud applications, which an AI agent can then query or modify without developers having to write a custom integration for each one.

Why Does MCP Matter?

Streamlined Integrations

Before MCP, developers often had to build ad hoc or proprietary “plugins” for each service they wanted to connect to an AI system. This meant high maintenance overhead, fragile code, and limited interoperability. MCP addresses this by defining one standardized way to connect AI models to services such as a Git repository, a Slack workspace, or an external database.

Model-Agnostic

A crucial feature of MCP is that it’s not tied to a single AI model. Whether you prefer Anthropic’s Claude, OpenAI’s GPT models, or an open-source model, MCP can act as a universal connector. This flexibility reduces the risk of vendor lock-in and encourages a broader ecosystem of tools built around AI.

Robust Ecosystem

Companies large and small have begun to release MCP servers for their applications. In a short time, the community has produced hundreds of connectors that extend AI agents into everything from code editors to real-time weather data, most of them developed in the open.

Security and Governance

As AI models gain the power to modify files or call APIs, the question of safety naturally comes up. MCP provides the groundwork for integrated authentication and authorization: for HTTP-based transports, the specification defines an authorization flow based on OAuth 2.1, so organizations can restrict what an AI agent can access. This is especially useful in enterprise settings where multiple teams need different levels of data visibility.
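One way to picture this kind of access control is a scope check that runs before any tool call is dispatched. The sketch below is an illustration only, not part of the MCP specification: the tool names, the scope strings, and the `is_allowed` helper are all hypothetical, and it assumes the caller's access token has already been validated and its scopes extracted.

```python
# Hypothetical mapping from tool name to the scope a caller must hold.
# Neither the tool names nor the scope strings come from the MCP spec.
REQUIRED_SCOPE = {
    "read_file": "files:read",
    "write_file": "files:write",
}


def is_allowed(tool_name: str, granted_scopes: set) -> bool:
    """Return True only if the caller holds the scope the tool requires.

    Tools with no entry in REQUIRED_SCOPE are denied by default.
    """
    required = REQUIRED_SCOPE.get(tool_name)
    return required is not None and required in granted_scopes


# A read-only team can read files but cannot write them.
read_only_team = {"files:read"}
print(is_allowed("read_file", read_only_team))
print(is_allowed("write_file", read_only_team))
```

Denying unknown tools by default means a newly added tool stays inaccessible until someone assigns it a scope.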

How MCP Works

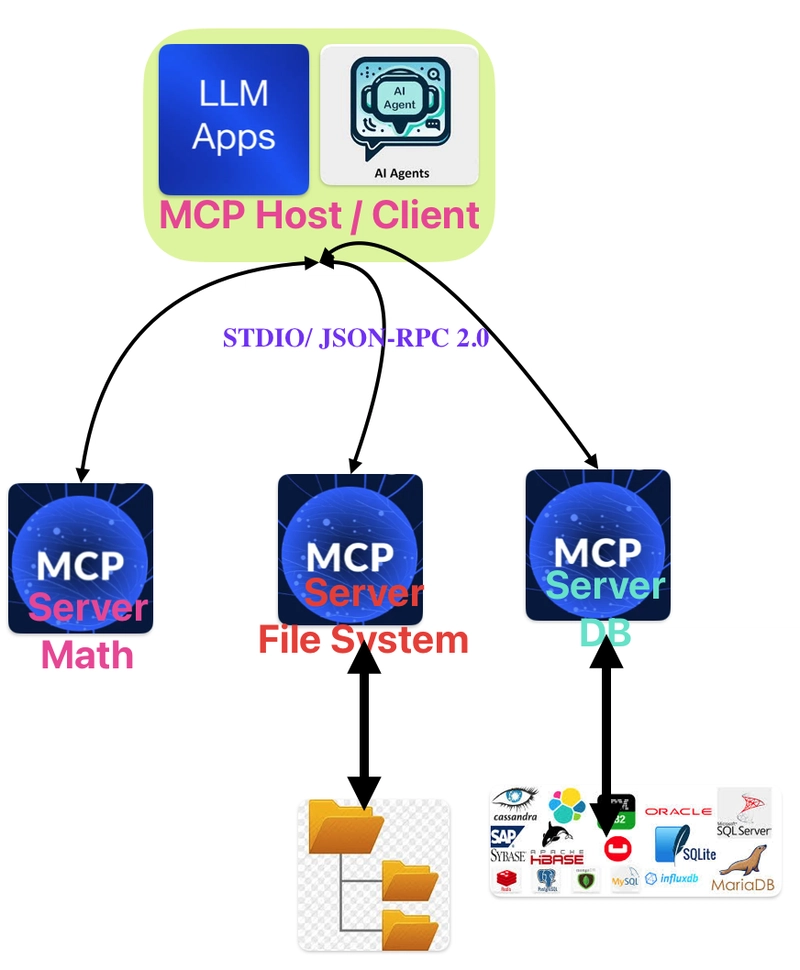

Client-Server Model

In MCP, the application hosting the AI model acts as the client that sends requests, while each external service sits behind an MCP server. When the AI needs real-world data, say the status of an order, the client sends a request to the relevant MCP server, which performs the action and returns the result.
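The order-status round trip described above can be sketched in a few lines of Python. MCP messages use JSON-RPC 2.0, and `tools/call` is the method a client uses to invoke a tool. Everything else here is an assumption made for illustration: the `get_order_status` tool, the in-memory `ORDERS` data, and the fact that the "server" is a plain function call rather than a real transport.

```python
import json

# Hypothetical in-memory order data standing in for a real backend.
ORDERS = {"A-1001": "shipped", "A-1002": "processing"}


def handle_request(raw: str) -> str:
    """Toy server: answer a JSON-RPC 2.0 `tools/call` request."""
    request = json.loads(raw)
    request_id = request.get("id")
    params = request.get("params", {})

    if request.get("method") != "tools/call":
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": request_id, "error": error})
    if params.get("name") != "get_order_status":
        error = {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}
        return json.dumps({"jsonrpc": "2.0", "id": request_id, "error": error})

    order_id = params.get("arguments", {}).get("order_id")
    status = ORDERS.get(order_id)
    result = {
        "content": [{"type": "text", "text": status or f"unknown order {order_id}"}],
        "isError": status is None,
    }
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


def call_tool(name: str, arguments: dict, request_id: int = 1) -> dict:
    """Toy client: build the request, 'send' it, and decode the response."""
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.loads(handle_request(json.dumps(request)))


response = call_tool("get_order_status", {"order_id": "A-1001"})
print(response["result"]["content"][0]["text"])  # prints "shipped"
```

A real deployment would replace the direct function call with a transport such as stdio or HTTP, but the request and response shapes stay the same.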

Unified Protocol

Both clients and servers follow the same protocol, much as web browsers and websites both follow HTTP. MCP messages are encoded as JSON-RPC 2.0, and because the specification is open, third-party developers can build new servers that any compliant client can discover and use.
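To make the shared message format concrete, here is a minimal Python sketch of the JSON-RPC 2.0 envelope and the opening handshake. The `initialize` method and the `notifications/initialized` notification are part of MCP. The helper names (`make_request`, `classify`), the client name, and the choice of protocol revision string are assumptions for illustration, so check the specification for the revision you target.

```python
from itertools import count
from typing import Optional

_request_ids = count(1)  # each request gets a fresh id


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request envelope."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def classify(message: dict) -> str:
    """Return 'request', 'notification', or 'response'; raise if malformed."""
    if message.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "method" in message:
        # Requests carry an id and expect a reply; notifications do not.
        return "request" if "id" in message else "notification"
    # A response has an id and exactly one of result / error.
    if "id" in message and ("result" in message) != ("error" in message):
        return "response"
    raise ValueError("malformed message")


# The client opens a session with `initialize`...
initialize = make_request("initialize", {
    "protocolVersion": "2025-03-26",  # one published revision, used as an example
    "capabilities": {},
    "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical
})
# ...and, once the server has replied, confirms with a notification.
initialized = {"jsonrpc": "2.0", "method": "notifications/initialized"}

print(classify(initialize), classify(initialized))  # prints "request notification"
```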

Discovery

One of MCP’s core promises is to let an AI “discover” available tools automatically. Once an application is connected to a set of MCP servers, such as a “GitOps Server,” an “E-commerce Inventory Server,” or a “Slack Server,” the developer does not have to wire up each capability by hand. The client can ask each server which actions and data it offers and pass that list to the AI, which then decides which to use.
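Discovery can be sketched as a loop that sends `tools/list` to every connected server and merges the answers into one catalog. `tools/list` is the MCP method for this, and each tool is described by a `name`, a `description`, and an `inputSchema`. The rest is hypothetical: the servers are in-memory functions, the tool names are invented, and the `server.tool` naming convention is just one way to avoid collisions.

```python
from typing import Callable, Dict, List

# A "server" here is simply a function from a JSON-RPC request to a response.
Server = Callable[[dict], dict]


def make_server(tools: List[dict]) -> Server:
    """Create a toy server that only knows how to answer `tools/list`."""
    def handle(request: dict) -> dict:
        if request["method"] == "tools/list":
            return {"jsonrpc": "2.0", "id": request["id"], "result": {"tools": tools}}
        error = {"code": -32601, "message": "Method not found"}
        return {"jsonrpc": "2.0", "id": request["id"], "error": error}
    return handle


# Hypothetical servers and tools, loosely modeled on the examples in the text.
SERVERS: Dict[str, Server] = {
    "git": make_server([{
        "name": "open_pull_request",
        "description": "Open a pull request",
        "inputSchema": {"type": "object", "properties": {"title": {"type": "string"}}},
    }]),
    "slack": make_server([{
        "name": "post_message",
        "description": "Post a message to a channel",
        "inputSchema": {"type": "object", "properties": {"text": {"type": "string"}}},
    }]),
}


def discover(servers: Dict[str, Server]) -> Dict[str, str]:
    """Ask every server for its tools and return {qualified_name: description}."""
    catalog: Dict[str, str] = {}
    for request_id, (server_name, server) in enumerate(servers.items(), start=1):
        response = server({"jsonrpc": "2.0", "id": request_id, "method": "tools/list"})
        for tool in response["result"]["tools"]:
            catalog[f"{server_name}.{tool['name']}"] = tool["description"]
    return catalog


for name, description in discover(SERVERS).items():
    print(f"{name}: {description}")
```

The resulting catalog is what an application would typically hand to the model so it can choose which tool to call.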

Key Benefits at a Glance

- Reduced Integration Hassle: No more re-implementing similar features for every service

- Vendor-Neutral: Supports multiple AI models and frameworks

- Scalability: Agents can dynamically add or remove new tools as organizations evolve

- Security: A standard foundation for authentication and permissioning

Emerging Use Cases

Enterprise Workflows

Companies can unify messaging, file storage, and on-premises databases under the same protocol, while their AI agent orchestrates tasks and automates repetitive steps.

Developer Tools

MCP servers for GitHub or GitLab let AI agents review code, open pull requests, and trigger continuous integration pipelines without custom-coded integrations.

Data Analytics

AI models can fetch real-time data from multiple sources, such as enterprise data lakes or public APIs, to produce live dashboards and reports.

Looking Ahead

As the AI landscape moves steadily toward agentic workflows—where AI agents autonomously combine and execute tasks—MCP’s open and collaborative ethos stands out. By simplifying how AI agents connect with the wider ecosystem of services, MCP paves the way for enterprise-grade automation, innovative consumer applications, and new forms of human-computer collaboration.

Looking forward, expect:

- Remote Hosting: Major cloud providers and platform specialists are offering remote MCP support, making it easier to deploy global AI-driven applications

- Expanded Modalities: Future MCP roadmaps talk about handling not just text-based inputs, but audio, video, and image data too

- Further Standardization: There are discussions about internationally recognized standards bodies adopting MCP components, which would help make it a widely accepted protocol across the industry

Final Thoughts

MCP might still be considered new, but its momentum is unquestionable. As an open standard, it’s free from the limitations often imposed by proprietary solutions, enabling a diverse community of contributors to rapidly extend its functionalities. If your organization is betting on AI and wants to harness the latest innovation in agentic tooling, the Model Context Protocol should definitely be on your radar.